I'm an avid self-hoster and have had n8n set up for a while, but I haven't used it very much. That is, until now...

This is a very quick post about how the automated notifications work on my blog with n8n. Probably quite niche, but hopefully useful for some!

I really like static sites. They're super fast and customisable, while giving you the flexibility of using React or other frontend components without any JavaScript getting shipped to the user (unless you want it, of course).

However, I've had a static site in the past, and the main way of authoring is with markdown files. That's fine, but I never found an editor that I enjoyed writing in. So I use Ghost for this website's backend, as it's got a fantastic editing experience. I recently switched my website to a static Astro frontend which pulls posts from Ghost, rather than using Ghost directly. I'm hoping this gives me the best of both worlds.

You can check out my site's source code here by the way.

Ghost Webhook

Ghost lets you create a webhook (docs here) which gets triggered when something happens. In my case, I'm only interested in when a post is published.

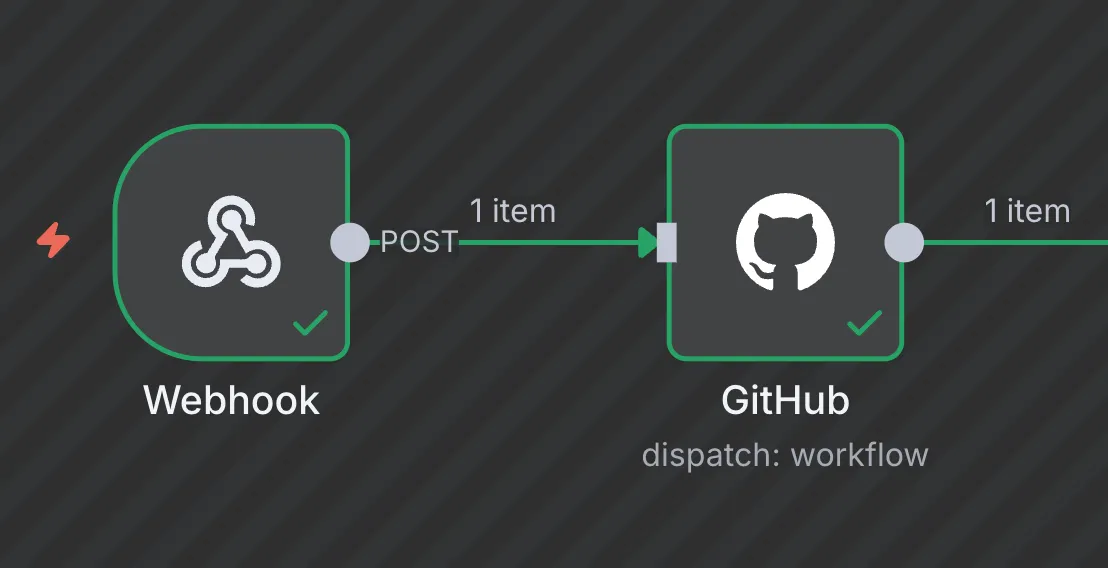

In n8n, I have a webhook receiver which is linked up to Ghost, so it triggers when a post is published. The payload contains some info about the post, such as the title and URL. Great!
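As a rough sketch of what the receiver has to work with, the fields used later in this post sit under `body.post.current` in the n8n webhook item. The helper name `extractPost` and the example values are hypothetical, and only the fields this post actually reads are shown; the real payload carries more.

```javascript
// Hypothetical helper: pull out the fields this post relies on.
// The shape body.post.current.{title,url,excerpt} matches what the
// Bluesky script later in this post reads; everything else is ignored.
function extractPost(item) {
  const current = item?.json?.body?.post?.current;
  if (!current) {
    throw new Error("Unexpected payload: missing body.post.current");
  }
  return {
    title: current.title,
    url: current.url,
    excerpt: current.excerpt ?? "",
  };
}

// Example item, trimmed down to the relevant fields (values are illustrative).
const exampleItem = {
  json: {
    body: {
      post: {
        current: {
          title: "Light Up Festival Bucket Hat",
          url: "https://ghost.jakew.me/light-up-festival-bucket-hat/",
          excerpt: "A short excerpt.",
        },
      },
    },
  },
};

const post = extractPost(exampleItem);
```

Failing loudly on an unexpected shape is a design choice here: it stops a malformed webhook call from kicking off a build and a notification further down the line.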

Before I had Astro, it was easy enough to just use this trigger to create a Bluesky post.

Auto building

I host my site with Cloudflare Workers, which is Cloudflare's serverless functions offering. The site is mostly static, but the contact form, for example, uses some server-side code. Cloudflare now recommend using Workers over Pages (which is just static site hosting), even for completely static sites.

Cloudflare automatically build my site whenever I commit to the repo, but publishing a post in Ghost doesn't create a commit, so the site doesn't update. Sadly, Cloudflare don't give you a webhook for triggering Workers builds manually like they do for Pages.

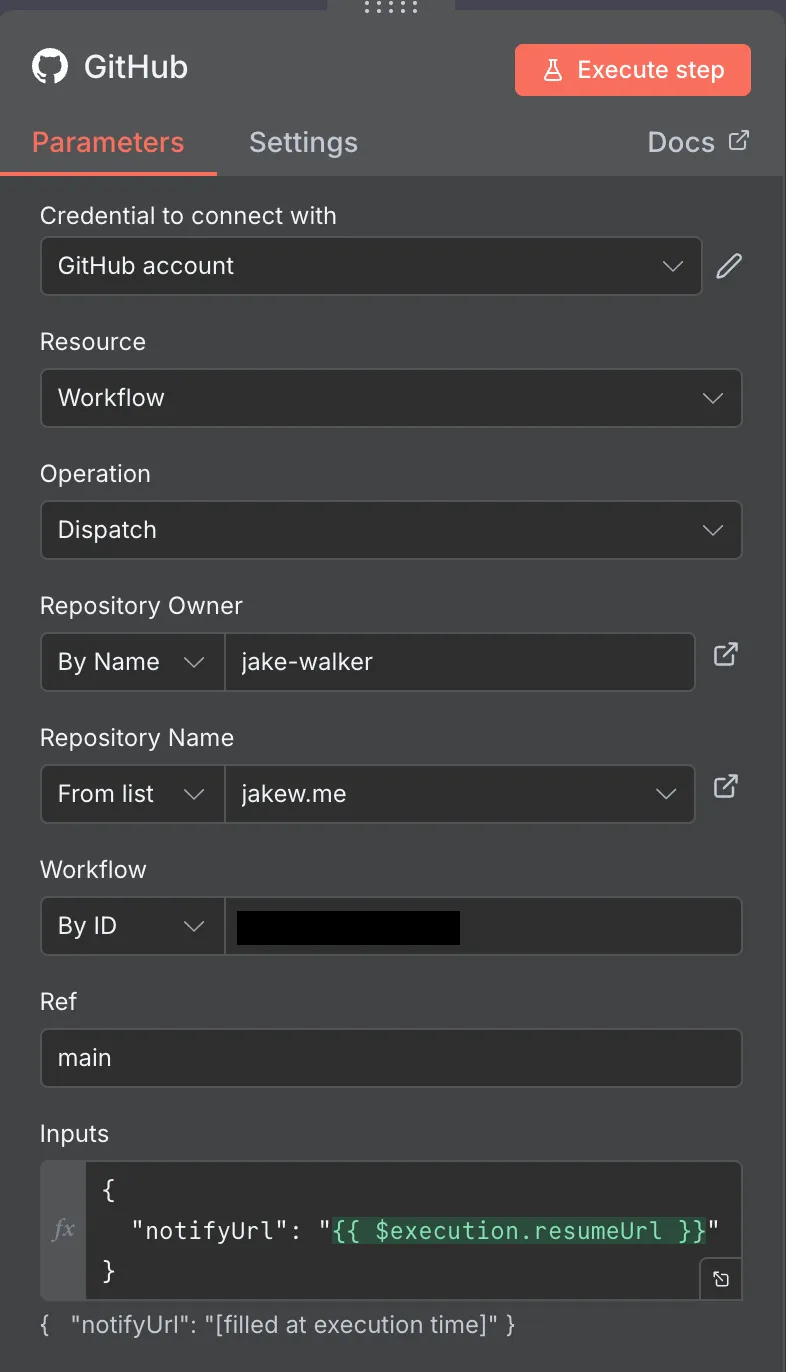

To resolve this, I set up a GitHub Actions workflow in my website repo (here) which is triggered with a workflow_dispatch event, essentially a manual trigger. Cloudflare build the site for me on a normal push, so I don't want this workflow to run for every push. I'll probably combine the two in the end for simplicity, but that's a job for another day.

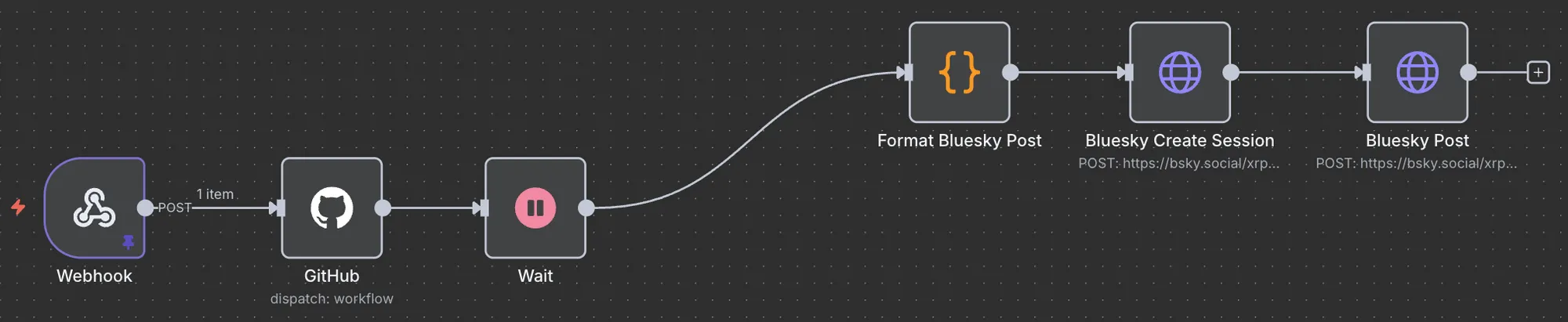

So once my n8n workflow receives the new blog post, it now kicks off the GitHub workflow.

Waiting for the build

The issue now is that the build can take a few minutes and I want to wait for the site to be live (or at least nearly live) before sending the notification. Luckily n8n has a cool "wait" node which can pause execution until a webhook has been received. This wait webhook is separate to the initial webhook and is unique for the execution.

When the workflow is dispatched, the wait webhook is passed in as an input to the workflow.
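To make that concrete, here's a minimal sketch of the request that dispatches the workflow, using GitHub's "create a workflow dispatch event" REST endpoint. The owner, repo, workflow file name, and the input name `callback_url` are all placeholders rather than my actual values, and the input has to be declared under `on.workflow_dispatch.inputs` in the workflow file. In n8n, the wait node's URL should be available as `$execution.resumeUrl`.

```javascript
// Build the HTTP request for GitHub's workflow dispatch endpoint.
// All names here (owner, repo, workflowFile, callback_url) are
// illustrative placeholders, not values taken from the real setup.
function buildDispatchRequest({ owner, repo, workflowFile, ref, token, resumeUrl }) {
  return {
    method: "POST",
    url: `https://api.github.com/repos/${owner}/${repo}/actions/workflows/${workflowFile}/dispatches`,
    headers: {
      Accept: "application/vnd.github+json",
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      ref, // branch or tag to run the workflow on
      inputs: { callback_url: resumeUrl }, // passed through to the workflow
    }),
  };
}

const request = buildDispatchRequest({
  owner: "example-owner",
  repo: "example-site",
  workflowFile: "deploy.yml",
  ref: "main",
  token: "<github-token>",
  resumeUrl: "https://n8n.example.com/resume-placeholder",
});
```

The last step of the GitHub workflow can then make an HTTP request to the URL it was given, which is what lets the paused n8n execution carry on.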

I have the wait node configured to wait for up to an hour, which should be plenty of time. The limit is there so the execution doesn't hang around forever if the workflow fails and never calls back.

Sending the notification

Once the build completes, the Bluesky notification part is run. The "format post" node runs some JavaScript to create a JSON object to send to Bluesky as there's a bit of processing required to get it into the right format. This node can reach back to grab context from the initial webhook for all the post information.
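One part of that processing is worth spelling out: Bluesky's link facets index into the UTF-8 encoded text, so `byteStart` and `byteEnd` are counted in bytes rather than JavaScript string positions. The two only agree when the text is plain ASCII. Here's a minimal sketch of converting between them, assuming `TextEncoder` is available in the runtime (it is in current Node.js; whether n8n's Code node sandbox exposes it is an assumption). The helper names are hypothetical.

```javascript
const encoder = new TextEncoder();

// Number of UTF-8 bytes taken up by the first `index` UTF-16 code units of `text`.
function byteOffset(text, index) {
  return encoder.encode(text.slice(0, index)).length;
}

// Convert a [start, end) range of string indices into byte offsets.
function toByteSpan(text, start, end) {
  return {
    byteStart: byteOffset(text, start),
    byteEnd: byteOffset(text, end),
  };
}

// "\u00e9" (e with an acute accent) takes two bytes in UTF-8, so the byte
// offsets end up one higher than the string indices from this point on.
const text = "Caf\u00e9 post - https://jakew.me/x";
const start = text.indexOf("https://");
const span = toByteSpan(text, start, text.length);
```

For a title with an emoji or accented character in it, skipping this conversion would shift the link highlight by a few characters.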

The "create session" node makes a call to the Bluesky API to create a session using my auth token, then the final node creates a new post using the formatted post and the newly created session token.
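As a sketch of what those two calls look like, going by the Bluesky docs rather than my exact node configuration: the session comes from `com.atproto.server.createSession`, and the post is created with `com.atproto.repo.createRecord`. The host `bsky.social`, the helper names, and the use of a handle plus app password are assumptions for illustration.

```javascript
// Assumption: the account lives on the default bsky.social host.
const PDS = "https://bsky.social";

// Request that exchanges a handle and app password for a session.
function buildCreateSessionRequest(identifier, appPassword) {
  return {
    method: "POST",
    url: `${PDS}/xrpc/com.atproto.server.createSession`,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ identifier, password: appPassword }),
  };
}

// `session` is the parsed createSession response, which includes
// `accessJwt` (the bearer token) and `did` (the account identifier).
function buildCreatePostRequest(session, post) {
  return {
    method: "POST",
    url: `${PDS}/xrpc/com.atproto.repo.createRecord`,
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${session.accessJwt}`,
    },
    body: JSON.stringify({
      repo: session.did,
      collection: "app.bsky.feed.post",
      record: post,
    }),
  };
}

// Illustrative values only.
const sessionRequest = buildCreateSessionRequest("example.bsky.social", "<app-password>");
const postRequest = buildCreatePostRequest(
  { accessJwt: "<jwt>", did: "did:plc:example" },
  { $type: "app.bsky.feed.post", text: "Hello", createdAt: new Date().toISOString() }
);
```

In n8n, each of these maps onto an HTTP Request node, with the second one reading the token and DID from the output of the first.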

There are some great docs from Bluesky on how to create posts programmatically, which I based my n8n workflow on. Here's the script that builds up the Bluesky post object:

    function parseUrls(text) {
      const spans = [];
      // partial/naive URL regex based on: https://stackoverflow.com/a/3809435
      // tweaked to disallow some trailing punctuation
      const urlRegex = /[$|\W](https?:\/\/(www\.)?[-a-zA-Z0-9@:%._+~#=]{1,256}\.[a-zA-Z0-9()]{1,6}\b([-a-zA-Z0-9()@:%_\+.~#?&//=]*[-a-zA-Z0-9@%_\+~#//=])?)/g;

      let match;
      while ((match = urlRegex.exec(text)) !== null) {
        // the regex consumes one leading character, so skip past it to find the URL itself
        const start = match.index + match[0].indexOf(match[1]);
        spans.push({
          // NOTE: these are JavaScript string indices. Bluesky facets expect UTF-8 byte
          // offsets, so they only line up when the text before the URL is plain ASCII
          start,
          end: start + match[1].length,
          url: match[1],
        });
      }
      return spans;
    }

    function truncateToTwoSentences(text) {
      if (!text) {
        return ""; // handle null, undefined or empty input
      }
      // split on sentence-ending punctuation, trimming each piece and dropping empty ones
      const sentences = text
        .split(/[.?!]/)
        .map((s) => s.trim())
        .filter((s) => s !== "");
      if (sentences.length <= 2) {
        return text.trim(); // two sentences or fewer: return the original, trimmed
      }
      // join the first two with ". " and add a final "."
      return sentences.slice(0, 2).join(". ") + ".";
    }

    // loop over all the webhook inputs (probably just one)
    return $('Webhook').all().map((item) => {
      console.log(item);
      const current = item.json.body.post.current;

      // swap the Ghost backend domain for the public site domain
      const newUrl = current.url.replace("ghost.jakew.me", "jakew.me");
      const content = `I've just published a new blog post "${current.title}" - ${newUrl}`;

      // create the actual post object
      return {
        "json": {
          "post": {
            "$type": "app.bsky.feed.post",
            "text": content,
            "createdAt": new Date().toISOString(),
            "embed": {
              "$type": "app.bsky.embed.external",
              "external": {
                "uri": newUrl,
                "title": current.title,
                "description": truncateToTwoSentences(current.excerpt)
              }
            },
            // turn each URL found in the text into a clickable link facet
            "facets": parseUrls(content).map((u) => ({
              "index": {
                "byteStart": u["start"],
                "byteEnd": u["end"],
              },
              "features": [
                {
                  "$type": "app.bsky.richtext.facet#link",
                  "uri": u["url"],
                }
              ],
            }))
          }
        }
      };
    });

Wrap Up

The final result is a post like this:

I've just published a new blog post "Light Up Festival Bucket Hat" - https://jakew.me/light-up-festival-bucket-hat/

— Jake Walker (@jakew.me) 23 August 2025 at 23:49

Yes, this is a little overkill, but it turned out to be quite a cool little project to create with n8n! Maybe in the future I'll add Mastodon and other platforms.

I'd highly recommend having a play around with n8n. You can build some crazy complex workflows with it; I've even seen people online who have made fully automated AI slop posting machines.